What Is The Standard Measurement Of Electricity?

Electricity is the set of physical phenomena associated with the presence and motion of matter that has the property of electric charge. Electrification is the process by which an object acquires a net electric charge, for example through friction. Electrical phenomena arise from the forces that electric charges exert on one another: like charges repel and opposite charges attract.

Standard units for measuring electricity are necessary to ensure we have a common system for monitoring, evaluating and billing for electrical energy. As electricity became more widespread during the late 19th century, scientists and engineers realized the importance of having standardized units. Without standards, confusion would arise and development of electrical devices would be hindered.

Volts

A volt is the standard unit of electric potential difference (voltage), the difference in electric potential between two points in a circuit. Voltage is what drives current around a circuit, and the volt is also the unit of electromotive force, the voltage produced by a source such as a battery or generator. Some common voltage levels include:

- 1.5 volts – A standard AA battery

- 3.7 volts – Nominal voltage of a lithium-ion or lithium polymer cell used in smartphones and tablets

- 5 volts – Standard USB port output

- 12 volts – Most car batteries

- 110/120 volts – Common household mains electricity in North America

- 230 volts – Common household mains electricity in much of Europe, Asia, Africa, and Australia

For a given current, a higher voltage delivers more power, because power equals voltage multiplied by current; higher voltages are also more dangerous. Lower voltages are safer but need more current to deliver the same power. Voltage is often compared to the “pressure” that pushes electric charge through a circuit.
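As a rough illustration of the trade-off between voltage and current, the short Python sketch below computes the current an appliance would draw at North American and European mains voltages. The 1,200-watt appliance and the function name are hypothetical, and the sketch assumes a simple resistive load so that power is exactly voltage times current (real AC appliances can deviate from this).

```python
def current_for_power(power_watts: float, voltage_volts: float) -> float:
    """Current (amps) needed to deliver a given power at a given voltage, from P = V * I."""
    if voltage_volts <= 0:
        raise ValueError("voltage must be positive")
    return power_watts / voltage_volts

# Hypothetical 1,200 W appliance on two common mains voltages
for volts in (120, 230):
    amps = current_for_power(1200, volts)
    print(f"{volts} V -> {amps:.1f} A")
```

The same appliance draws roughly half the current on a 230-volt supply as on a 120-volt supply, which is why higher-voltage systems can use thinner wiring for the same power.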

Amperes

An ampere (symbol: A) is the standard unit of electric current in the International System of Units (SI). One ampere corresponds to one coulomb of electric charge flowing past a given point in a circuit each second.

Some examples of common ampere levels:

- Small devices such as flashlights running on AAA batteries typically draw from tens of milliamps up to about an amp; the current is set by the device, not by the battery alone.

- Standard US household outlets are on circuits rated for 15 or 20 amps, the maximum current available for powering appliances, lights, and devices.

- Electric vehicle chargers commonly supply tens of amps, and DC fast chargers can supply hundreds of amps to recharge an EV battery quickly.

- Heavy industrial equipment like arc welders can use 200-600 amps.

In everyday electrical terms, then, amps measure how much electric current flows in a wire or circuit. More amps means more charge (in metal wires, more electrons) passes a point every second; at a given voltage, that means more power.
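Because one ampere is one coulomb per second, a current can be converted into a count of electrons passing a point each second. The sketch below divides by the elementary charge, 1.602176634 x 10^-19 coulombs (an exact value in the SI since 2019); the function name is illustrative, and it assumes all of the current is carried by electrons, as in a metal wire.

```python
# Elementary charge in coulombs (exact by definition since the 2019 SI revision)
ELEMENTARY_CHARGE_C = 1.602176634e-19

def electrons_per_second(current_amps: float) -> float:
    """Electrons passing a point each second for a given current.

    One ampere is one coulomb per second, so divide the charge per second
    by the charge carried by a single electron.
    """
    return current_amps / ELEMENTARY_CHARGE_C

print(f"{electrons_per_second(1.0):.3e}")   # 6.242e+18
```

Roughly six billion billion electrons pass a point every second in a 1-amp current, and doubling the current doubles that count.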

Ohms

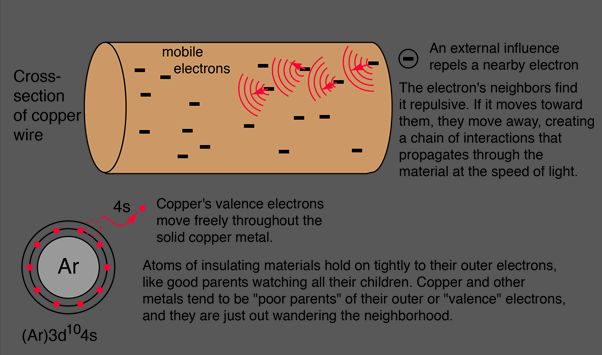

The ohm (symbol: Ω) is the unit of electrical resistance, the opposition a material offers to the flow of electric current. Good conductors such as metals have many free electrons available to carry current and so have low resistance, while insulators have very few free electrons and high resistance. The lower the resistance in ohms, the more readily current flows for a given voltage.

The standard unit of electrical resistance is named after German physicist Georg Ohm. One ohm is defined as the resistance between two points of a conductor when a constant potential difference of one volt, applied between those points, produces a current of one ampere.
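This definition can be rearranged into Ohm's law, V = I x R, which relates voltage, current and resistance. The Python sketch below writes out the three forms; the function names and the 12-volt, 6-ohm example are hypothetical, and it assumes an ideal resistor whose resistance does not change with current.

```python
def amps_from(volts: float, ohms: float) -> float:
    """Current through a resistance: I = V / R."""
    return volts / ohms

def ohms_from(volts: float, amps: float) -> float:
    """Resistance from a voltage and the current it produces: R = V / I."""
    return volts / amps

def volts_from(amps: float, ohms: float) -> float:
    """Voltage across a resistance carrying a current: V = I * R."""
    return amps * ohms

# The definition: 1 volt across 1 ohm drives 1 ampere
print(amps_from(1.0, 1.0))    # 1.0
# Hypothetical 12 V supply across a 6 ohm resistor
print(amps_from(12.0, 6.0))   # 2.0
print(ohms_from(12.0, 2.0))   # 6.0
print(volts_from(2.0, 6.0))   # 12.0
```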

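Because resistance depends on a component's length and cross-section as well as its material, the values below are only rough illustrations:

- Well under 1 ohm – A short length of copper wire, since copper is an excellent conductor.

- Intermediate and highly variable – Semiconductors, whose resistance lies between that of conductors and insulators and depends strongly on doping and temperature.

- Millions of ohms or more – Insulators such as plastic or ceramic.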

Measuring resistance in ohms helps determine how easily electric current can flow through a material. This resistance value is important for designing electrical circuits and components.
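Resistance depends on a conductor's dimensions as well as its material, and can be estimated from the material's resistivity ρ with R = ρL/A, where L is the length and A the cross-sectional area. This sketch assumes a resistivity for copper of about 1.68 x 10^-8 ohm-metres near room temperature and a hypothetical 10-metre wire with a 1.5 mm² cross-section; the function name is illustrative.

```python
# Approximate resistivity of copper near 20 degrees C, in ohm-metres (assumed value)
COPPER_RESISTIVITY = 1.68e-8

def wire_resistance(resistivity_ohm_m: float, length_m: float, area_mm2: float) -> float:
    """Estimate resistance with R = rho * L / A, converting A from mm^2 to m^2."""
    area_m2 = area_mm2 * 1e-6
    return resistivity_ohm_m * length_m / area_m2

# Hypothetical 10 m copper wire with a 1.5 mm^2 cross-section
r = wire_resistance(COPPER_RESISTIVITY, 10.0, 1.5)
print(f"{r:.3f} ohms")   # 0.112 ohms
```

Doubling the length doubles the resistance, while doubling the cross-section halves it, which is why thick, short cables are used where large currents flow.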

Watts

The watt (symbol: W) is the unit of power, the rate at which energy is transferred. One watt is defined as one joule of energy per second. For electricity, power is the rate at which electrical energy is used or generated; in a circuit it equals voltage multiplied by current (watts = volts x amps).

Some common examples of wattage levels:

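- A traditional incandescent light bulb uses 60 to 100 watts, while an LED bulb giving similar light uses only a fraction of that.

- Larger appliances like refrigerators, washing machines, and electric ovens draw from a few hundred to several thousand watts when running.

- Small electronics like phones and laptops often use 5 to 100 watts.

- Electric cars typically charge at roughly 1,000 to 20,000 watts (1 to 20 kilowatts) from home and Level 2 chargers, and at 50 kilowatts or more from DC fast chargers.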

Wattage indicates the power draw or output of an electrical device or system. Understanding wattage levels helps compare how much electricity different appliances use and determine the capacity needed from an electrical system or generator.
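Wattage ratings can be combined with P = V x I to check whether several appliances fit on one circuit. This Python sketch assumes a hypothetical 120-volt, 15-amp household circuit and made-up appliance ratings; the function name is illustrative, and it ignores any safety margins an electrical code may require.

```python
def circuit_capacity_watts(volts: float, breaker_amps: float) -> float:
    """Rated maximum power a circuit can supply: P = V * I."""
    return volts * breaker_amps

# Hypothetical appliance ratings in watts
appliances = {"microwave": 1000, "toaster": 900, "lamp": 60}

capacity = circuit_capacity_watts(120, 15)   # 1800 W
total_load = sum(appliances.values())        # 1960 W

if total_load > capacity:
    print(f"Overloaded: {total_load} W exceeds {capacity:.0f} W")
else:
    print(f"OK: {total_load} W of {capacity:.0f} W used")
```

Running the microwave and toaster together would exceed this circuit's rating, so one of them would need to move to a different circuit.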

Kilowatt-hours

A kilowatt-hour (kWh) is a unit of energy that represents the amount of electricity consumed over time. It measures electric energy consumption by multiplying power usage (in kilowatts) by the time period used (in hours). For example, a 100-watt light bulb running for 10 hours would consume 1 kWh of electricity (100 watts x 10 hours = 1,000 watt-hours = 1 kWh).
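The conversion in this example can be written as a pair of small functions. Besides kilowatt-hours, the sketch converts to joules, the SI unit of energy, using 1 kWh = 1,000 W x 3,600 s = 3.6 million joules; the function names are illustrative.

```python
def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy in kWh: watts times hours gives watt-hours; divide by 1,000."""
    return power_watts * hours / 1000

def kwh_to_joules(kwh: float) -> float:
    """1 kWh = 1,000 W * 3,600 s = 3,600,000 J."""
    return kwh * 3_600_000

bulb_kwh = energy_kwh(100, 10)    # the 100 W bulb running for 10 hours
print(bulb_kwh)                   # 1.0
print(kwh_to_joules(bulb_kwh))    # 3600000.0
```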


Kilowatt-hours are commonly used for billing electricity usage. A utility reads the electricity meter at a home or business, which records cumulative energy use (smart meters often log it in intervals such as every 15 minutes), to determine total kWh usage over a billing period, usually about one month. The kWh usage is then multiplied by the electric rate to determine the energy charge on the bill. For example, a home that used 600 kWh in a month at a rate of $0.15/kWh would be billed $90 for energy (600 kWh x $0.15/kWh = $90).

Understanding kilowatt-hours can help consumers identify what appliances or behaviors lead to high electricity usage. Knowing the wattage of devices and estimated hourly use can give an approximation of kWh consumption to help manage costs. Kilowatt-hours provide a standardized way to measure electric energy use over time.
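The estimate described above can be sketched in Python by summing wattage x daily hours across devices. The device list, wattages, and hours are hypothetical averages (a refrigerator, for instance, cycles on and off rather than drawing a constant load), the function names are illustrative, and the $0.15/kWh rate is taken from the billing example; real bills often add fixed charges and taxes that this sketch ignores.

```python
# Hypothetical devices: name -> (average power in watts, hours of use per day)
devices = {
    "refrigerator": (150, 24),
    "television": (100, 4),
    "laptop": (50, 6),
}

def monthly_kwh(devices: dict[str, tuple[float, float]], days: int = 30) -> float:
    """Sum watts * hours/day * days for each device, then convert Wh to kWh."""
    watt_hours = sum(watts * hours * days for watts, hours in devices.values())
    return watt_hours / 1000

def energy_charge(kwh: float, rate_per_kwh: float) -> float:
    """Energy cost rounded to cents (no fixed charges or taxes)."""
    return round(kwh * rate_per_kwh, 2)

usage = monthly_kwh(devices)
print(f"{usage:.1f} kWh -> ${energy_charge(usage, 0.15):.2f}")   # 129.0 kWh -> $19.35
# Reproduces the billing example: 600 kWh at $0.15/kWh
print(energy_charge(600, 0.15))                                    # 90.0
```

In this made-up household, the always-on refrigerator accounts for most of the usage, which is the kind of insight such an estimate is meant to surface.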

History

The standard units used to measure electricity have evolved over centuries as our understanding of electricity advanced. In the 18th and early 19th centuries, scientists like Benjamin Franklin, Alessandro Volta, Georg Ohm and André-Marie Ampère conducted pioneering research on electricity and magnetism. Their discoveries laid the foundations for the relationships between voltage, current and resistance.

In the 19th century, as more practical uses for electricity emerged, scientific committees and international congresses worked to define unified systems of electrical units. A key milestone was the International Electrical Congress held in Paris in 1881, which adopted the ohm, volt and ampere as practical units. These early standardization efforts were crucial precursors to the system we use today.

Over the late 19th and 20th centuries, these practical units were refined and eventually incorporated into the International System of Units (SI), adopted in 1960 to standardize measurements across many domains, including electromagnetism. In the SI, the ampere is a base unit, while the volt, ohm and watt are derived from the base units. Practical units like the kilowatt-hour also came into widespread use during the expansion of electric power systems.

While the fundamentals remain similar, the standard electrical units continue to be refined as our measurement capabilities improve; since the 2019 revision of the SI, for example, the ampere has been defined by fixing the numerical value of the elementary charge. Yet the core concepts can still be traced back to those early researchers.

International System of Units

The International System of Units (SI) is the global standard for measurement today. It was established in 1960 by the General Conference on Weights and Measures and has been updated periodically since then. Of its seven base units, the ampere is the one that covers electricity; the other electrical units are derived from the base units:

Ampere – The SI unit of electric current is named after French physicist André-Marie Ampère (1775-1836). Electric current is the rate of flow of electric charge.

Volt – The volt is named after Italian physicist Alessandro Volta (1745-1827), who invented the first chemical battery. Volts measure electric potential difference and electromotive force.

Ohm – The ohm measures electrical resistance and impedance. It is named after German physicist Georg Ohm (1789-1854), who discovered the relationship between voltage, current, and resistance known as Ohm’s law.

Watt – The watt measures power and is equal to one joule per second. It is named after Scottish engineer James Watt (1736-1819), who helped develop the steam engine.
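The volt, ohm and watt are derived units: each can be written as a product of powers of SI base units, starting from the joule (kg m^2 s^-2) and the definitions 1 W = 1 J/s, 1 V = 1 W/A and 1 Ω = 1 V/A. The sketch below represents a unit as a dictionary of base-unit exponents, a hypothetical representation chosen for illustration, and derives each unit by adding or subtracting exponents.

```python
def combine(a: dict[str, int], b: dict[str, int], sign: int = 1) -> dict[str, int]:
    """Multiply (sign=1) or divide (sign=-1) units by adding or subtracting exponents."""
    result = dict(a)
    for base, exp in b.items():
        result[base] = result.get(base, 0) + sign * exp
    # Drop base units whose exponent cancelled to zero
    return {base: exp for base, exp in result.items() if exp != 0}

SECOND = {"s": 1}
AMPERE = {"A": 1}                     # SI base unit of current
JOULE = {"kg": 1, "m": 2, "s": -2}    # energy: kg * m^2 / s^2

WATT = combine(JOULE, SECOND, -1)     # one joule per second
VOLT = combine(WATT, AMPERE, -1)      # one watt per ampere
OHM = combine(VOLT, AMPERE, -1)       # one volt per ampere

for name, unit in [("watt", WATT), ("volt", VOLT), ("ohm", OHM)]:
    print(name, " ".join(f"{b}^{e}" for b, e in sorted(unit.items())))
```

The result shows, for instance, that the ohm expands to kg m^2 s^-3 A^-2, which is why every electrical quantity can ultimately be traced back to the SI base units.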

The SI units for electricity allow precise worldwide standardized measurement in science, industry, and daily life. They honor key pioneers in understanding electricity.

Measuring Electricity

Electricity is measured using various tools and meters. The most common ones are:

Voltmeter – Measures voltage in volts or kilovolts. Used to measure potential difference between two points. Has very high resistance. Connected in parallel across the component.

Ammeter – Measures current in amperes or milliamperes. Has very low resistance. Connected in series in the circuit.

Wattmeter – Measures power in watts. Uses a current coil connected in series with the load and a potential (voltage) coil connected in parallel across it.

Ohmmeter – Measures resistance in ohms. Applies a small voltage and measures resulting current to calculate resistance using Ohm’s law.

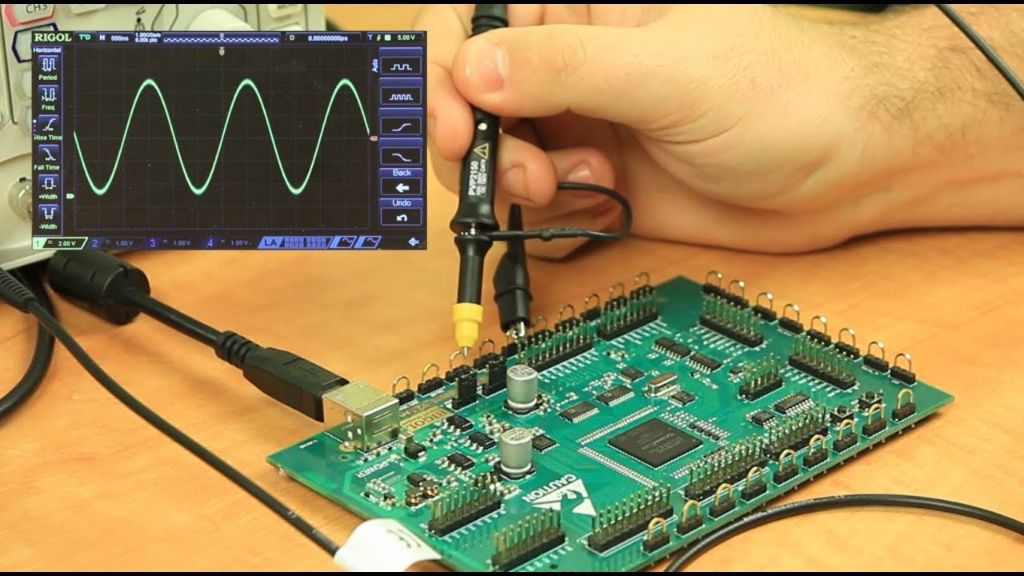

Oscilloscope – Graphically displays voltage over time, plotting voltage on the vertical axis against time on the horizontal axis. Used to visualize waveforms and measure voltage, frequency, timing, and more.

Multimeter – Combination meter that can measure volts, amps, ohms, and other parameters. Most common handheld measurement tool.

Accurate measurement helps analyze circuits, troubleshoot issues, monitor usage, calculate power, and ensure electrical safety. Proper tools and techniques are essential.

Conclusion

In summary, electricity is measured using several standard units. Volts measure electric potential difference (voltage), amperes measure electric current, ohms measure electrical resistance, watts measure electric power, and kilowatt-hours measure electric energy consumed over time. These standardized units allow precise measurement and comparison of electricity globally. Several are named after pioneers of electromagnetism such as Alessandro Volta, André-Marie Ampère, and Georg Ohm, whose work laid their foundations.

Today, the International System of Units formally defines units like the volt, ampere, and ohm to ensure uniformity. The precise measurement and common understanding of electricity enabled by these standardized units have been crucial for modern electrical devices, systems, and infrastructure. As we rely more on electricity worldwide, consistent units of measurement allow electricity to be produced, delivered, and consumed efficiently and safely.

Standard units are essential for electrical engineering, research, and commerce. By providing a common language, they allow sharing of knowledge and coordination across borders and languages. As electricity becomes more important globally, maintaining the standards underpinning electrical measurement remains vital.